Recogni – Synergistic ML-Silicon Co-design

Written by Gilles Backhus, Co-Founder and VP of AI, Recogni

Recogni is a startup founded in 2017 on a mission to bring the best Perception compute technology to the automotive ADAS/AD space. As of summer 2022, were are proud to say that our first silicon is the fastest, lowest-latency and most energy-efficient ML-vision chip out there, beating competition by a factor of 3 to 10x.

In this article we would like to share with you some of the principles that helped us build an engineering organization that enables the deep synergy of two (or more) distinctively different engineering disciplines to work tightly together for the co-design of a radically innovative ML-silicon technology – ML-silicon co-design here being a special sub case of the known field of HW/SW co-design.

Additionally, over the next weeks and months, we will release a series of tech articles that will further disclose how we developed our technology and explain how we were able to achieve our performance.

Now, let’s first cover how Recogni is structured for an overview.

We have always had two homes: San Jose in California, and Munich in Germany. The first has silicon and HW design, firmware software and data capture/creation covered, whereas the latter takes care of all ML-related SW, including the compiler and all necessary SW infrastructure from datasets to compiled perception stacks.

At first glance, it’s not hard to realize how such a setup would be the right approach to build the best possible tech for automotive Perception. But over the last 5 years we learned that certain topics deserve extra attention to make it work. Hence, I will elaborate on a few core principles one should be deeply aware about in such an environment, if the plan is to have these two “worlds” work closely together, instead of putting abstract barriers/interfaces between them as it is the case in bigger companies (for some good, and some questionable reasons).

The two engineering personas (silicon, and ML) can be described in essence as follows:

- Our typical silicon designer is an experienced engineer who has successfully designed, tested, and brought up many chips in their career – and is extremely well sensibilized for the “one shot” nature of their work. The consequences of major errors are costly in many ways as silicon design costs and the precious time spent over additional iterations imposes an immense burden on startups.

- In contrast, our typical ML designer is someone with 0 to 5 years of experience after graduation, accustomed to a very organic, explorative and “forgiving” development philosophy.

In a nutshell: In some fundamental ways they are quite different. However, bridging these two varying disciplines into one coherent engineering team, working in synchronicity, proved to be the very basis for the ability to develop a new piece of ML silicon much faster and much more innovatively than bigger corporates.

Determinism

The first core principle I’d like to raise awareness about is the degree of (perceived) determinism one associates with an engineering discipline. Or, another way to put it is: How “sharp” or confident is the predictability of a new task’s achievability (a new feature, a new circuit, anything…).

There are some engineering disciplines that operate within a world of high degrees of “felt” determinism: Full-stack SW engineering, standard CMOS chip design, PCB design, just to name a few that are relevant to us. Basically, when there is something new to do, it is possible for an experienced engineer (ing manager) to confidently predict a timeline of execution. “I have done this before.”, “This guy has implemented it on reddit.”, and so on. This does not mean the task is simpler to achieve and it could still be very challenging to complete, requiring a very skilled engineering mind to execute it. But at least one would have a much clearer idea of how long it takes to complete a task, what are the dependencies, and where are the critical paths.

On the other hand, there are engineering disciplines (such as ML engineering) that work day-to-day with a lot more acceptance for, and acknowledgement of, a general feeling of non-determinism. Of course, a neural network stack is technically deterministic (same input will result in same output) as it is essentially one huge formula. But it manages to hide its determinism and interpretability very well under its sheer ungraspable complexity and seemingly arbitrary behavior. The result is, as mentioned above, a very iterative and “curious” thinking process. Not by choice, but by necessity.

Eventually, overcoming this potential source of challenges boils down to empathic interaction. If both sides have a high degree of empathy for the nature of the other’s world, then highly synergistic and cohesive co-design is possible.

Communication

Certainly, this is not the first article to state the obvious importance of good communication. But in our case, we learned that a few certain aspects should be emphasized.

Over-communication. When dealing with people who think very differently compared to yourself about the thing you collectively work on, it is important to repeat, repeat, and repeat. Just because you mentioned the meaning of an acronym 3 weeks ago in a standup, it does not guarantee that your colleague from the other discipline will have internalized its theoretical, and especially its practical meanings. Even a simple word such as ‘segmentation’ will mean completely different things to a chip designer versus an ML engineer. Of course, context eliminates confusion in most situations. But it is essential in closely inter-twined cross-disciplinary engineering teams to take a step back and really try hard to put yourself in the position of the other person.

Another challenge is of psychological nature: Since repetition may appear unnatural and almost condescending to the one repeating the message (interestingly, rarely to the receiver!), one needs to fight the urge to avoid what might seem like an implicit confrontation or insult. The uncompromised no-ego end-goal should always be clarity.

And the other one is: Treat your internal documentation as a product. It starts already at the on-boarding of new team members. If you want to set the tone right off the bat (which is when it should happen to avoid any later bias or confusion) for a very cooperative and highly inter-twined way of working, then rock solid documentation of “the other world” needs to be easily accessible. And more than that: Treat your colleagues of other disciplines like a close customer. Serve, empathize, collaborate.

Management

Coming back to the challenges associated with the non-deterministic nature of ML engineering, it is important to look at what this means for overarching project management.

Many product development cycles will contain critical paths consisting of tasks from a range of different disciplines that naturally might not synchronize well. This poses a challenge since precisely timelined productization does not pair well with ML’s unavoidably unpredictable need for iterative, explorative innovation. It is not uncommon that an ML project’s complexity suddenly balloons up from an easily-done-in-2-weeks incremental model retraining to a new from-scratch project with many aspects to be researched first over months.

Solving this requires two ingredients: Project management with profoundly appreciates the respective natures and challenges of all disciplines involved, as well as a slightly more conservative-than-usual project timeline that accounts for significant disturbances in critical paths.

Second, technical leads, who are managing mixed teams with ML engineers as a part of it, need to understand one thing: The devil often entirely lies in the detail. Hence, taking baby steps and reflecting on the challenges that come with abstraction layers are crucial principles to internalize.

Imagine for instance the silicon team wants to evaluate the value of writing instruction support for a new type of neural network architecture. What shall the ML team do? Test for its benefit. Of course.

Let’s say after having found a remotely related paper with code (that of course did not trivially reproduce the advertised performance) you have derived from it the actual ML stack that you think your product needs and your technology is able to execute efficiently and effectively. At any of these stages a single out of maybe 100+ hyperparameters might completely mess up your model performance and send you on a wrong path due to faulty conclusions. Only once you finally change that one regularizer loss co-efficient from 0.01 to 0.002, everything suddenly magically works. Welcome to the ML world. The solution is to take baby steps – which many folks (either those new to ML, or those who have spent their last several years in more deterministic and humanly graspable engineering disciplines) highly underestimate. If you start big right away, you will get drowned in debugging. Most of the time, the quickest path to ML success is to start small.

This brings us to the next pitfall: Abstraction.

Abstraction is necessary for any serious product-level ML development environment. It enables scalability. But it comes with the often-underestimated risk of wrongly implied automatism and genericness. The reason why above-mentioned hyperparameter might have been set to 0.01 could simply be because some regularizer module that’s been part of the environment for a long time, and its users had started assuming “it just works” since that had literally been the case for months or years. The awareness for its highly significant sensitivity had faded away over time. Plus, it is economically unfeasible to always hyperparameter-search your way through every single one of them every time you want to develop something new. Constant reflection on the level of adaptability of all abstraction layers, preferably supported by a range of randomized, recurrent, automated end-to-end “unit tests”, helps with minimizing these risks.

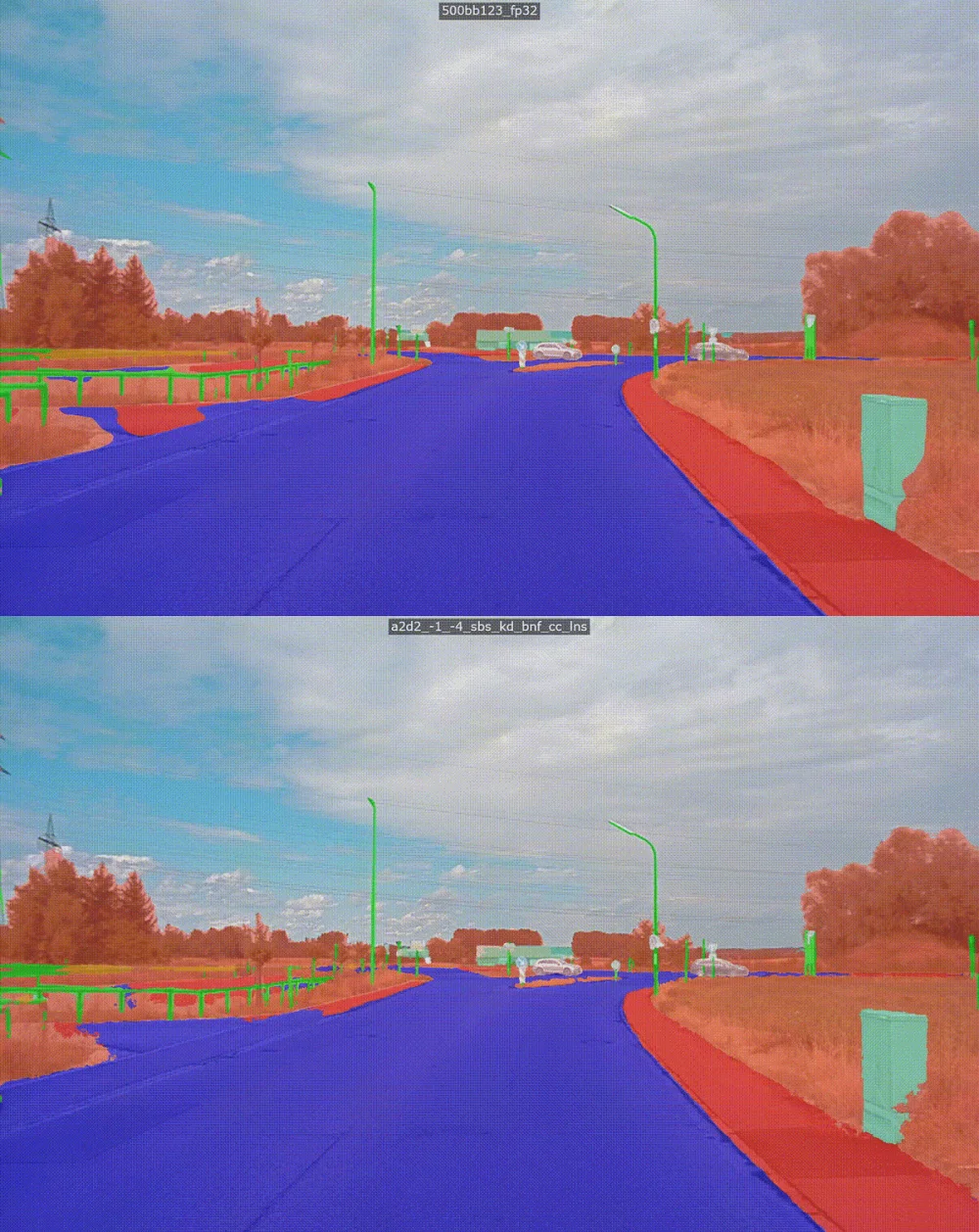

Lastly, Recogni is among the most “end-to-end” ML silicon startups out there. To many people this may seem like overwhelming engineering with way too many moving parts and appearing as not being focused on any single aspect of the overall stack. But there is a good reason why we very consciously and intentionally decided to be a company that builds end-to-end reference systems from sensors in a car, self-captured data, chip design, neural network design, compiler tool chain, visualization etc.

Not only does this allow our core teams to develop their respective parts of the stack in the context of an end-to-end system instead of in isolation, enabling them to observe the system-level implications of their work. Or to show our customers what can be achieved with our vast amounts of compute.

But more than that: It creates a shared narrative for our teams internally.

For instance, integrating an image sensor as part of an actual reference system should, in theory, not be a hard requirement for a company that in essence wants to develop a chip for machine learning processing. But it establishes a common ground: For the chip designers the image sensor is a piece of embedded electronics that they must physically and logically interface with, whereas for an ML perception engineer it is the source for their neural network’s input layer. Eventually, it serves as a basis for empathic interaction.

-

We hope this article gave you a meaningful insight into some of the principles and practices that allowed us to build our engineering organization and continue to expand it.

As previously mentioned, we will be sharing further insights into our technology stack in the upcoming weeks.

If you have any questions or just want to generally get in touch, please feel free to contact us at contact@recogni.com.