Why is so much compute needed for Autonomy?

By Manish Singh, VP of Marketing

Aside from their use in the popular series Westworld, autonomous vehicles (AVs) have many benefits, the most prominent of which is the promise of safety. AVs will eliminate the dependency of the human driver in terms of attention, perception, and reaction time. Subsequently, in terms of safety improvements, AVs will reduce accidents, which accumulate to an economic loss of over $871B annually and approximately 40,000 deaths. AVs will also improve safety for pedestrians, bicyclists, motorcyclists, and scooter riders. The ability of AVs to operate in both highway and surface street settings is dependent on their skill to recognize and understand their surroundings so that they can make real-time decisions to navigate the roads.

An 18-year old is eligible for a driver’s license in the US and is able to traverse any road type at any time, regardless of weather constraints. This begs the question — why is it so difficult for a set of state-of-the art GPUs or CPUs to accomplish what an 18-year old can accomplish so easily?

One of the main issues is the ability of the technology to mimic the human vision cognition system — driving is 90% visual. Vision encompasses an extraordinary range of abilities. We can see color, detect motion, identify shapes, gauge distance and speed, and judge the size of far-away objects. We see in three dimensions even though images fall on the retina in two. The “machinery” that accomplishes these tasks is by far the most powerful and complex of all the sensory systems. The retina, which contains 150 million light-sensitive rod and cone cells, is often considered an extension of the brain. In the brain, neurons devoted to visual processing are in the hundreds of millions and take up about 30 percent of the cortex, a large chunk when compared to 8 percent for touch and just 3 percent for hearing. Each of the two optic nerves, which carry signals from the retina to the brain, consists of a million fibers; in contrast, each auditory nerve carries a mere 30,000. It is far easier to reverse engineer the human auditory system than it is to mimic the human vision system. As such, human auditory systems have already been commercialized for consumer and health markets.

Vision, of course, is more than just recording what meets the eye. It also encompasses the ability to understand, almost instantaneously, what we see, and this action happens in the brain, which has to construct our visual world. Confronted with a barrage of visual information, it must sort out relevant features and make instant judgments about the meaning. It has to guess at the true nature of reality by interpreting a series of visual cues, which help distinguish near from far, objects from background, and motion in the outside world from motion created by the turn of the head.

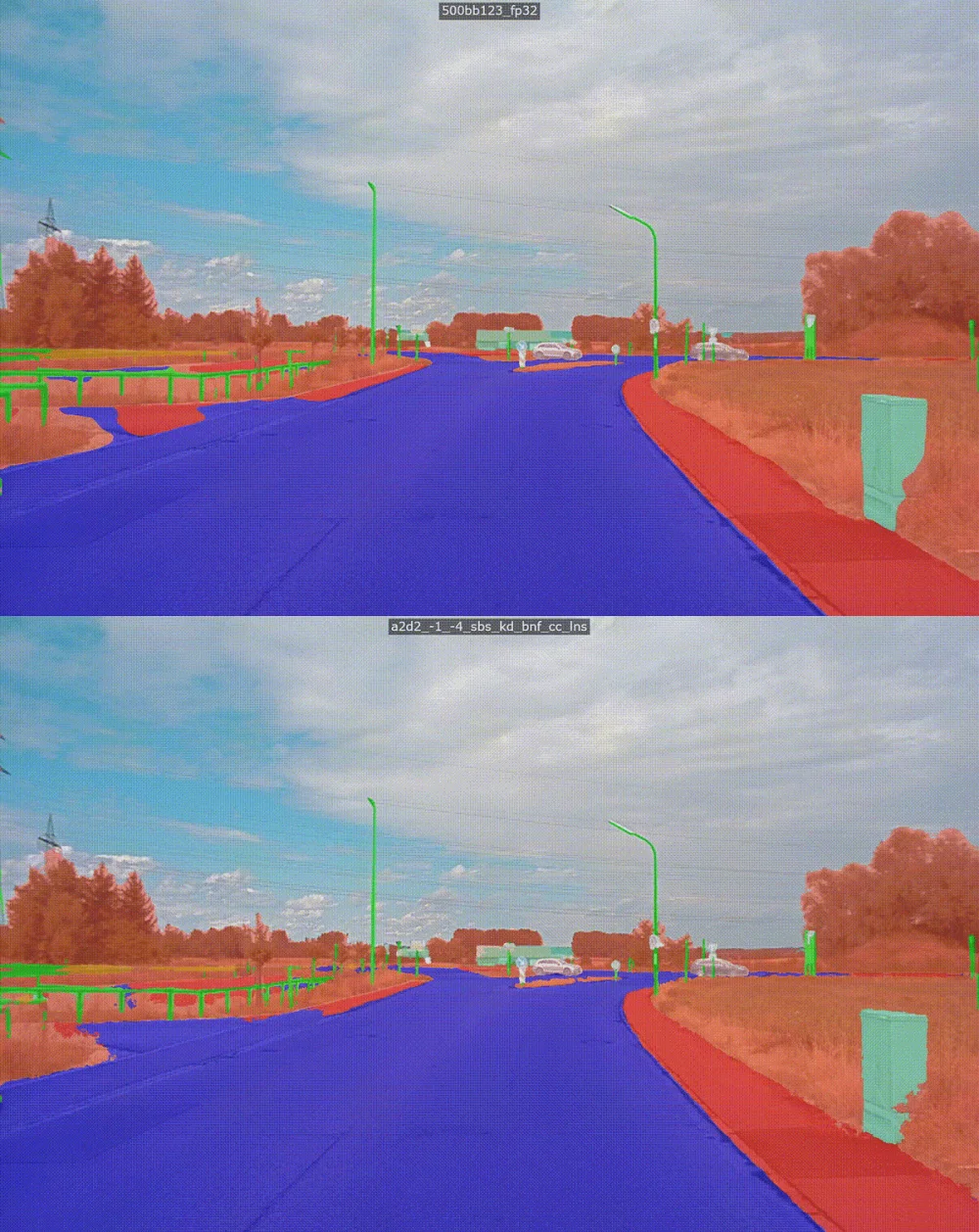

To meet autonomy requirements, we need legacy-free, purpose-built technology, designed to address the extremely demanding acquire-analyze-act requirements of perception processing. General purpose solutions cannot resolve these conflicting requirements. For example, to process high resolution, high frame rate camera input, we need to deliver a processor with one PetaOp compute, support the ability to process incoming 8 MPixel camera frames in 16mS, all while maintaining a minimal power consumption envelope of 10W.

In order to create a holistic autonomy solution based on AI, we must replicate the human driver’s process of training and inference. Just as we train people to prepare for all eventualities and scenarios while driving, we need to train the AI system in a way that delivers learning-driven inference for scene processing and object recognition capabilities. The inference system in the vehicle needs to execute this vision cognition inference while taking into account multiple conflicting performance and power consumption requirements. Moreover, the training process must not only be holistic in terms of scene and object coverage, but it must be cognizant of optimizations for the inference system. Specifically, to this point, Recogni has a portfolio of patented strategies for its inference systems, and its training process is tuned for this portfolio of technologies.

Some of the key benefits of this approach include accurate, real-time scene analysis by a system that never gets tired, distracted, or inebriated, and also mitigates most dangerous accidents (head-on collisions) and protects the Vulnerable Road Users such as pedestrians, bicyclists, motorcyclists, scooter-riders etc.

For a company to even attempt to deliver this product, it needs to execute a multi-disciplinary approach and build a multi-disciplinary team of technical expertise in camera systems, vision processing, AI algorithms, semiconductors, and systems. Recogni is unique in this sense, and our disparate expertise and abilities are being harnessed to bring our game-changing product to the market.